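Continuing the exploration of running Open edX in production with Docker

This post records the pitfalls I hit while deploying Open edX to Kubernetes.

I am fairly comfortable with Docker but not with Kubernetes. Before starting, I skimmed the Kubernetes Handbook (Kubernetes中文指南/云原生应用架构实践手册) to get a basic understanding of Kubernetes.

openedx-docker already ships a Compose template file for Open edX, along with prebuilt images. So how do we migrate an application managed by docker-compose to Kubernetes? kompose is the tool we need.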

kompose takes a Docker Compose file and translates it into Kubernetes resources.

kompose getting-started

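So let's start from getting-started.

I first followed the Minikube and Kompose guide. Installing Kompose went smoothly: brew install kompose is all it takes. Minikube, however, never started successfully, because it has to download files from Google's servers and my proxy connection kept dropping. After a frustrating round of attempts I gave up.

The point of installing Minikube is to run Kubernetes locally.

The second route was Minishift, which ran into the same network problem. If your minishift works, you can also try deploying arnold.

Installing minikube and minishift is simple:

brew cask install minikube

brew cask install minishift

But both need to pull images at startup, the images are hosted abroad, and the network is unstable.

Later I found that docker-for-mac already supports Kubernetes. If you plan to experiment locally, give it a try; see 基于 Docker for MAC 的 Kubernetes 本地环境搭建与应用部署 (setting up a local Kubernetes environment and deploying applications with Docker for Mac).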

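In the end I used Alibaba Cloud's K8S service. With network connectivity in mind, I chose the Hong Kong region. Creating a K8S cluster on Alibaba Cloud is simple: follow the official guide and you should run into essentially no problems. I used the default parameters with pay-as-you-go billing, and deleted the cluster when I was done.

My cluster information is as follows:

aliyun-k8s

Once the cluster is created, connect to it with kubectl. Installing kubectl on a Mac is simple: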

brew install kubernetes-cli

Then, following the Alibaba Cloud guide, copy the contents of the config file to ~/.kube/config on your local machine.
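A minimal sketch of that copy step, assuming the config shown in the Alibaba Cloud console was first saved to a local file. The function name `install_kubeconfig` and the source file name in the usage comment are hypothetical; only `~/.kube/config` comes from the text above.

```shell
# Hypothetical helper: put a downloaded kubeconfig where kubectl looks for it.
install_kubeconfig() {
  src="$1"                           # file holding the config copied from the console
  dest="${2:-$HOME/.kube/config}"    # kubectl's default config location
  mkdir -p "$(dirname "$dest")" || return 1
  # Keep a backup instead of silently overwriting an existing config.
  if [ -f "$dest" ]; then
    cp "$dest" "$dest.bak" || return 1
  fi
  # The file contains credentials, so restrict it to the current user.
  cp "$src" "$dest" && chmod 600 "$dest"
}

# Usage (the source file name is an assumption):
#   install_kubeconfig ./aliyun-kubeconfig.yaml
#   kubectl cluster-info
```

Backing up first matters if you already talk to another cluster, since the copy replaces the whole file rather than merging contexts.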

Once this is configured, you can use kubectl to access the Kubernetes cluster from your local machine.

Cluster information

➜ /tmp kubectl cluster-info

Kubernetes master is running at https://47.91.175.41:6443

Heapster is running at https://47.91.175.41:6443/api/v1/namespaces/kube-system/services/heapster/proxy

KubeDNS is running at https://47.91.175.41:6443/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy

monitoring-influxdb is running at https://47.91.175.41:6443/api/v1/namespaces/kube-system/services/monitoring-influxdb/proxy

To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.

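Now that access from my local machine to the Alibaba Cloud Kubernetes cluster works, we can start deploying containerized applications onto it.

Back to the getting-started guide, beginning with the first hello world example: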

wget https://raw.githubusercontent.com/kubernetes/kompose/master/examples/docker-compose.yaml

kompose convert

kompose up
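`kompose up` converts and deploys in one step. As a hedged alternative, the two steps can be kept apart so the generated manifests can be reviewed before anything reaches the cluster. The function name `convert_and_apply` and the `k8s` output directory are illustrative choices, not part of the guide; `-f` and `-o` are kompose's input and output options, and `kubectl apply -f` accepts a directory.

```shell
# Sketch: convert a Compose file into manifests, then apply them with kubectl.
convert_and_apply() {
  compose_file="${1:-docker-compose.yaml}"
  out_dir="${2:-k8s}"                 # illustrative directory name
  # Create the directory first so kompose writes one file per resource into it.
  mkdir -p "$out_dir" || return 1
  kompose convert -f "$compose_file" -o "$out_dir" || return 1
  # Review the files in $out_dir here if needed, then create the resources.
  kubectl apply -f "$out_dir"
}

# Usage:
#   convert_and_apply docker-compose.yaml k8s
```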

The application has been deployed to k8s successfully:

➜ /tmp kubectl describe svc frontend

Name: frontend

Namespace: default

Labels: io.kompose.service=frontend

Annotations: kompose.cmd=kompose up

kompose.service.type=LoadBalancer

kompose.version=1.13.0 ()

Selector: io.kompose.service=frontend

Type: LoadBalancer

IP: 172.19.2.193

LoadBalancer Ingress: 47.91.175.137

Port: 80 80/TCP

TargetPort: 80/TCP

NodePort: 80 32678/TCP

Endpoints: 172.16.1.136:80

Session Affinity: None

External Traffic Policy: Cluster

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal EnsuringLoadBalancer 6m service-controller Ensuring load balancer

Normal EnsuredLoadBalancer 6m service-controller Ensured load balancer

Let's fetch some other information:

➜ /tmp kubectl get deployment

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

frontend 1 1 1 1 5m

redis-master 1 1 1 1 5m

redis-slave 1 1 1 1 5m

➜ /tmp kubectl get services

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

frontend LoadBalancer 172.19.2.193 47.91.175.137 80:32678/TCP 6m

kubernetes ClusterIP 172.19.0.1 <none> 443/TCP 1h

redis-master ClusterIP 172.19.1.162 <none> 6379/TCP 6m

redis-slave ClusterIP 172.19.2.20 <none> 6379/TCP 6m

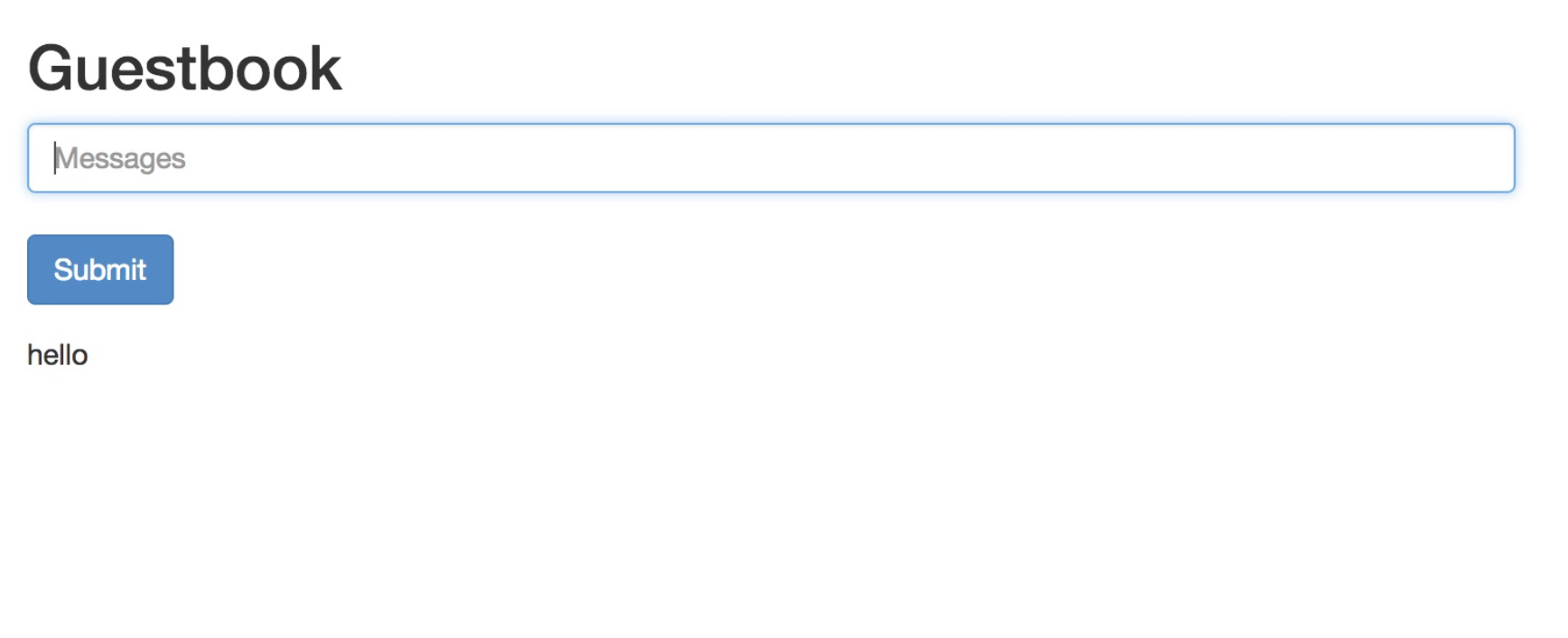

Visit http://47.91.175.137/ and it works!
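The address comes from the LoadBalancer Ingress field of the frontend service. A small sketch of reading it programmatically rather than from the `describe` output; the function name `service_url` is made up for illustration, and it assumes the load balancer reports an IP (some providers report a hostname instead).

```shell
# Print the http URL of a LoadBalancer service once it has an external IP.
service_url() {
  svc="${1:-frontend}"
  ip="$(kubectl get svc "$svc" -o jsonpath='{.status.loadBalancer.ingress[0].ip}')" || return 1
  # The field stays empty while the cloud load balancer is still being provisioned.
  [ -n "$ip" ] || { echo "no external IP yet for $svc" >&2; return 1; }
  echo "http://$ip/"
}

# Usage:
#   curl -I "$(service_url frontend)"
```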

You can shut it down with kompose down, which feels very much like using docker-compose.

Next, let's try deploying openedx-docker to k8s.

After kompose convert:

➜ openedx-docker git:(master) ✗ ls

LICENSE.txt data lms-worker-deployment.yaml rabbitmq-claim0-persistentvolumeclaim.yaml

Makefile docker-compose-android.yml memcached-deployment.yaml rabbitmq-deployment.yaml

README.md docker-compose.yml mongodb-claim0-persistentvolumeclaim.yaml smtp-deployment.yaml

android elasticsearch-claim0-persistentvolumeclaim.yaml mongodb-deployment.yaml xqueue

cms-claim0-persistentvolumeclaim.yaml elasticsearch-deployment.yaml mysql-claim0-persistentvolumeclaim.yaml xqueue-claim0-persistentvolumeclaim.yaml

cms-claim1-persistentvolumeclaim.yaml forum mysql-deployment.yaml xqueue-claim1-persistentvolumeclaim.yaml

cms-deployment.yaml forum-deployment.yaml nginx-claim0-persistentvolumeclaim.yaml xqueue-consumer-claim0-persistentvolumeclaim.yaml

cms-worker-claim0-persistentvolumeclaim.yaml lms-claim0-persistentvolumeclaim.yaml nginx-claim1-persistentvolumeclaim.yaml xqueue-consumer-claim1-persistentvolumeclaim.yaml

cms-worker-claim1-persistentvolumeclaim.yaml lms-claim1-persistentvolumeclaim.yaml nginx-claim2-persistentvolumeclaim.yaml xqueue-consumer-deployment.yaml

cms-worker-deployment.yaml lms-deployment.yaml nginx-deployment.yaml xqueue-deployment.yaml

config lms-worker-claim0-persistentvolumeclaim.yaml nginx-service.yaml

configure lms-worker-claim1-persistentvolumeclaim.yaml openedx

Then start the deployment:

➜ openedx-docker git:(master) ✗ kompose up

WARN Restart policy 'unless-stopped' in service nginx is not supported, convert it to 'always'

WARN Restart policy 'unless-stopped' in service forum is not supported, convert it to 'always'

WARN Restart policy 'unless-stopped' in service lms is not supported, convert it to 'always'

WARN Restart policy 'unless-stopped' in service elasticsearch is not supported, convert it to 'always'

WARN Restart policy 'unless-stopped' in service xqueue_consumer is not supported, convert it to 'always'

INFO Service name in docker-compose has been changed from "xqueue_consumer" to "xqueue-consumer"

WARN Restart policy 'unless-stopped' in service smtp is not supported, convert it to 'always'

WARN Restart policy 'unless-stopped' in service memcached is not supported, convert it to 'always'

WARN Restart policy 'unless-stopped' in service mysql is not supported, convert it to 'always'

WARN Restart policy 'unless-stopped' in service mongodb is not supported, convert it to 'always'

WARN Restart policy 'unless-stopped' in service cms_worker is not supported, convert it to 'always'

INFO Service name in docker-compose has been changed from "cms_worker" to "cms-worker"

WARN Restart policy 'unless-stopped' in service xqueue is not supported, convert it to 'always'

WARN Restart policy 'unless-stopped' in service cms is not supported, convert it to 'always'

WARN Restart policy 'unless-stopped' in service lms_worker is not supported, convert it to 'always'

INFO Service name in docker-compose has been changed from "lms_worker" to "lms-worker"

INFO Build key detected. Attempting to build and push image 'regis/openedx:hawthorn'

INFO Building image 'regis/openedx:hawthorn' from directory 'openedx'

A few images have to be built locally; this is by design, so that users can customize their instance.

➜ ~ docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

4fc8145fbb1f 7aa3602ab41e "/bin/sh -c 'apt upd…" 44 seconds ago Up 35 seconds hardcore_banach

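The network in mainland China is painfully slow, so for now I skipped the custom build and used the stock images. With that change the images did all get deployed, and quickly.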

➜ openedx-docker git:(master) ✗ kubectl get po

NAME READY STATUS RESTARTS AGE

elasticsearch-6c95f49cd5-6hzg4 0/1 Pending 0 16m

frontend-68c74b6cff-mj5lh 1/1 Running 0 34m

lms-b894746f8-fntfp 0/1 Pending 0 16m

memcached-6558fbf75b-bnmrq 1/1 Running 0 16m

mongodb-79bc846f-f7ppv 0/1 Pending 0 16m

mysql-7968589598-52ll4 0/1 Pending 0 16m

nginx-7c47bb9849-tl6mj 0/1 Pending 0 16m

rabbitmq-686d48c4bb-lvqcx 0/1 Pending 0 16m

redis-master-74b5b4995f-mjzqq 1/1 Running 0 34m

redis-slave-75f5b85bd7-hsjx5 1/1 Running 0 34m

smtp-77849cc9c6-n5vpk 1/1 Running 0 16m

xqueue-5d6c9cccff-fcpvs 0/1 Pending 0 16m

xqueue-consumer-5976ffc456-7lnwm 0/1 Pending 0 16m

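The READY column shows that many containers are in trouble. The specific cause can be inspected with kubectl describe pods lms-b894746f8-fntfp; in most cases it is the volume-related part that failed.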
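To avoid scanning the table by eye, the listing can be filtered. The sketch below reads `kubectl get po` output on standard input and prints every pod whose STATUS column is not Running; the function name `pods_not_running` is hypothetical.

```shell
# Read `kubectl get po` output on stdin; print name and status of pods
# whose STATUS (third column) is anything other than Running.
pods_not_running() {
  awk 'NR > 1 && $3 != "Running" { print $1, $3 }'
}

# Usage:
#   kubectl get po | pods_not_running
#   kubectl describe pod <pod-name>   # the Events section explains a Pending pod
#   kubectl get pvc                   # claims stuck in Pending point at volume provisioning
```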

If you want to fix this, read up on Persistent Volume (持久化卷).
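For orientation, here is a rough sketch of what a claim manifest looks like, written out from a shell helper. The helper name `write_pvc`, the default size, and the output file name are assumptions for illustration and are not taken from the generated files. A claim only becomes Bound when the cluster has a matching PersistentVolume or a StorageClass that can provision one, which this sketch does not set up.

```shell
# Hypothetical helper: write a minimal PersistentVolumeClaim manifest to a file.
write_pvc() {
  name="$1"
  size="${2:-100Mi}"                                # assumed default size
  out="${3:-$name-persistentvolumeclaim.yaml}"
  cat > "$out" <<EOF
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: $name
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: $size
EOF
}

# Usage:
#   write_pvc mysql-claim0 1Gi
#   kubectl apply -f mysql-claim0-persistentvolumeclaim.yaml
#   kubectl get pvc mysql-claim0    # STATUS should move from Pending to Bound
```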

After temporarily dropping the volumes, several of the containers came up and ran normally:

➜ openedx-docker git:(master) ✗ kubectl get po

NAME READY STATUS RESTARTS AGE

elasticsearch-5b4d6dffb8-8nwr6 1/1 Running 0 2m

frontend-68c74b6cff-mj5lh 1/1 Running 0 58m

lms-7cfbc89fc-jbmfd 0/1 CrashLoopBackOff 4 2m

memcached-6558fbf75b-8j5zq 1/1 Running 0 2m

mongodb-684976884c-pclk5 1/1 Running 0 2m

mysql-85476d6b47-wcqqx 0/1 CrashLoopBackOff 4 2m

nginx-7cff5659c9-rk4n6 1/1 Running 0 2m

rabbitmq-666b58d6df-qrk4l 1/1 Running 0 2m

redis-master-74b5b4995f-mjzqq 1/1 Running 0 58m

redis-slave-75f5b85bd7-hsjx5 1/1 Running 0 58m

smtp-77849cc9c6-rnm68 1/1 Running 0 2m

xqueue-5d6c9cccff-fcpvs 0/1 Pending 0 39m

xqueue-consumer-5976ffc456-7lnwm 0/1 Pending 0 39m
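lms and mysql are now in CrashLoopBackOff, meaning the container starts, exits, and is restarted with increasing delay. A sketch of how one might look for the cause; the function name `crash_report` and the line counts are arbitrary choices.

```shell
# Show recent events and the log of the last crashed container for a pod.
crash_report() {
  pod="$1"
  # Events at the end of `describe` show restarts and back-off reasons.
  kubectl describe pod "$pod" | tail -n 20
  # --previous prints the log of the previously terminated container instance.
  kubectl logs --previous --tail=50 "$pod"
}

# Usage:
#   crash_report mysql-85476d6b47-wcqqx
```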

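openedx-docker manages Open edX through a Makefile, which in essence just drives containers. Migrating that part to kubectl commands is not hard; see

docker用户过渡到kubectl命令行指南 (a guide to the kubectl command line for users coming from docker)

For example, to get a shell inside a container we can use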

➜ openedx-docker git:(master) ✗ kubectl exec -ti smtp-77849cc9c6-n5vpk -- /bin/sh

# ls

bin boot dev etc home lib lib64 media mnt opt proc root run sbin srv sys tmp usr var

#
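Pod names carry a generated suffix that changes on every redeploy, so Makefile-style targets are easier to port if the pod is looked up by label. The sketch below relies on the `io.kompose.service` label visible in the service selector earlier; the function names `pod_for` and `shell_into` are hypothetical.

```shell
# Resolve the first pod of a kompose service via its io.kompose.service label.
pod_for() {
  kubectl get po -l "io.kompose.service=$1" -o jsonpath='{.items[0].metadata.name}'
}

# Open a shell in that pod, similar to `docker exec -ti <container> /bin/sh`.
shell_into() {
  pod="$(pod_for "$1")" || return 1
  [ -n "$pod" ] || { echo "no pod found for service $1" >&2; return 1; }
  kubectl exec -ti "$pod" -- /bin/sh
}

# Usage:
#   shell_into smtp
```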

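Addendum

After deleting the k8s cluster on Alibaba Cloud, keep an eye on your billing records: some services that were started along with it are not terminated and keep being charged, the NAT gateway for example.

References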